Using the split and awk commands to chunk and process a massive text file (~4.5G)

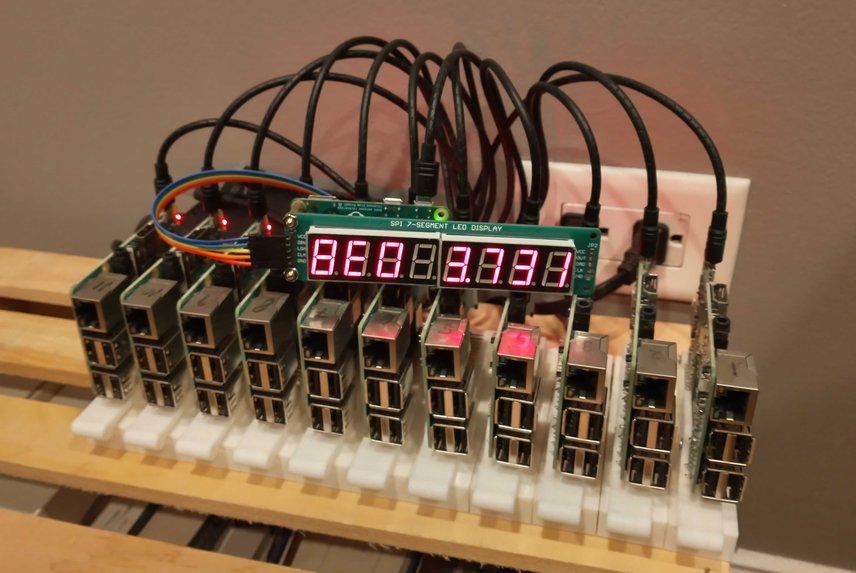

Linux command-line utilities are fast, like really, really fast. The split, sed, and awk commands are the most efficient ways of parsing large text files that I've found. I'm building a Beowulf cluster of Raspberry Pis for a side project, and it hit a bottleneck that slowed the system down significantly. In this cluster each worker node polls the control server for a job file. The control server pulls a few lines directly out of a massive text file for the node to work on. Because I was pulling these lines out on demand every time a job was fetched, the control server was getting bogged down by the number of requests. When I first wrote the control script I used PHP's SplFileObject to pull lines out of the file to serve to the worker node.

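    <?php
    // JOB_FILE, JOB_SIZE, and $start are assumed to be defined elsewhere in the control script.
    $file = new SplFileObject(JOB_FILE);
    $file->seek($start); // jump to the first line of the requested job

    $job = '';
    for ($i = 0; $i < JOB_SIZE; $i++) {
        $current = $file->current();
        // Strip surrounding whitespace, then the trailing backslash
        $job .= rtrim(trim($current), "\\") . PHP_EOL;
        $file->next();
    }

    // Spit out the job
    header('Content-type: text/plain');
    echo $job;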

It was a quick and easy script to write, and it got the job done. But this runs in the cloud, so inefficient code gets expensive, and I want to keep the cost of the project as close to zero as possible. With a source file this size and this many requests, I'd need a much larger server than should really be necessary just to keep the cluster from idling while it waits for jobs.

A solution is to split the massive text file up into job files and just serve those. The worker gets the URL to a job file hosted on AWS S3 and pulls it from there. This bypasses the control server for serving job files; the only thing it needs to do is manage delegating the jobs.

This solution means I need to split a very large text file (~4.5G) up into smaller pieces. Adding to the complication, each line of the file has an extra trailing backslash with a random amount of whitespace on either side of it, and all of that needs to be trimmed.

My first attempt was to use PHP again. I just copied the code that pulled lines with the SplFileObject and thought I could loop through the file and wait. When it started, the script was pretty fast and it looked like it would need an hour or three. Not a big deal, since this is a one-time job and I could just leave it running for a bit. After about an hour it became clear that the job was getting slower the deeper into the file it got; if I waited, it could take about a day and a half to finish. After looking into alternatives I settled on split and awk, which ended up taking only a few minutes to process the file.

Formatting the lines.

awk '{sub(/(\s*)\\(\s*)$/, "")}1' big.file > formatted.big.file

The awk command (named for its creators, Aho, Weinberger, and Kernighan) is a powerful and fast program that lets you manipulate a data stream easily. It's a good fit for this task: I can stream the contents of the big file through awk, format each line quickly, and send the output to a new file. When it's done running I'll have a source file ready to split into pieces.

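The awk portion of this command looks complicated but it's pretty simple. The harder part to figure out is the regular expression it uses. This command uses awk's sub (substitute) function to find a string and replace it. The trailing 1 is a pattern that is always true, and with no action attached awk's default is to print the line, so every line gets written out after the substitution. The syntax is basically...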

awk '{sub(/regexp-search/, "replacement")}1' source_file

Regular expressions have a steep learning curve but I strongly recommend getting the basics figured out. They're a powerful string searching option available in almost every language and can handle simple or very complex search logic.

For this file, I want to remove any trailing backslash and any whitespace on either side of it, so I came up with this regular expression: /(\s*)\\(\s*)$/. It matches a trailing backslash with any amount of whitespace (or none) on either side. The parentheses wrapping (\s*) form a group. The groups aren't strictly necessary, it can just be \s*, but they make the regex easier to read if I have to come back to it later. If you use regular expressions in code, comment the hell out of them; the developer who comes back to read your code later will be appreciative, since they take a while to deconstruct. \s matches any whitespace character (it's a GNU awk extension; [[:space:]] is the portable POSIX spelling). The quantifier * means match 0 or more of the preceding character, so \s* means match any amount of consecutive whitespace. \\ matches a single backslash and $ matches the end of the string.
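To see the substitution in action, here is a minimal sketch run against a few made-up sample lines. The clean_line function name and the sample data are assumptions for illustration. The regex uses [ \t] (space or tab) in place of \s so it also runs on awk implementations without the GNU extension; that is slightly narrower than \s, which covers other whitespace characters too.

```shell
# Hypothetical helper: strip a trailing backslash plus any spaces or tabs
# on either side of it. The trailing 1 makes awk print every line.
clean_line() {
    awk '{sub(/[ \t]*\\[ \t]*$/, "")}1'
}

# Made-up sample lines: two with a trailing backslash, one already clean.
printf '%s\n' 'alpha \ ' 'beta\' 'gamma' | clean_line
```

This should print alpha, beta, and gamma, one per line. The line without a backslash passes through untouched because sub only changes lines where the pattern matches.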

Awk took ~90 seconds to run through the entire 4.5GB file and format each line. There might be ways to optimize the PHP script I was trying but this was _way_ better, quicker to write and faster to run.
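A quick way to sanity-check the formatted output is to count the lines that still end in a backslash. This is only a sketch: the count_dirty function name and the two tiny sample files are assumptions for illustration, not part of the original run.

```shell
# Hypothetical check: count lines that still end in a backslash,
# optionally followed by whitespace. grep -c prints 0 and exits 1
# when nothing matches, so "|| true" keeps the exit status clean.
count_dirty() {
    grep -c '\\[[:space:]]*$' "$1" || true
}

# Made-up sample files standing in for the file before and after formatting.
check_dir=$(mktemp -d)
printf '%s\n' 'clean' 'dirty \ ' > "$check_dir/before"
printf '%s\n' 'clean' 'dirty' > "$check_dir/after"

count_dirty "$check_dir/before"
count_dirty "$check_dir/after"
```

On the real data, running count_dirty against formatted.big.file should report 0 if the awk pass caught everything.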

Splitting the file up.

split --verbose -l600 --suffix-length 6 --numeric-suffixes=1 formatted.big.file j_

This is the easy part. The split command basically splits data up into files. It can split by bytes or by lines, which is perfect for chunking up the formatted source file. The command above specifies chunks of 600 lines and numeric suffixes. By default split appends a two-letter suffix to each output file, for example aa, then ab. I need the files to match the job IDs in the code I've already written. Adding --numeric-suffixes=1 forces split to use numbers only and start at 1 (the default is 0). I also need to specify --suffix-length: the big file split into 600-line chunks is going to yield ~650000 files, so I need 6 characters to work with.
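To get a feel for the file names these flags produce, here is a small sketch that runs the same split options against a 1500-line stand-in file. It assumes GNU split (the long options are GNU extensions); the demo_dir variable and sample.file are made up for the demo.

```shell
# Work in a throwaway directory so the demo files are easy to clean up.
demo_dir=$(mktemp -d)

# 1500 numbered lines stand in for the formatted source file.
seq 1 1500 > "$demo_dir/sample.file"

# Same flags as the real command: 600 lines per chunk, 6-digit
# numeric suffixes starting at 1.
split -l600 --suffix-length 6 --numeric-suffixes=1 "$demo_dir/sample.file" "$demo_dir/j_"

# Lists j_000001, j_000002, j_000003 and sample.file.
ls "$demo_dir"

# The last chunk holds the 300 leftover lines.
wc -l < "$demo_dir/j_000003"
```

The suffixes are zero-padded to the requested length, so whatever consumes the job files needs to expect j_000001 rather than j_1.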

Why it's faster.

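Using split and awk took about 3-4 minutes to process everything. Both commands have been around for a long time; they're open source and have been reviewed and improved on for just as long. In my first attempt PHP is interacting with the entire file. Even though I'm using the SplFileObject and its seek method, it's still much slower. As far as I can tell, seek works by line number and has to read from the top of the file to reach the target line, which would explain why the job slowed down the deeper into the file it got.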

In the PHP script I'm doing all the things: keeping track of the number of lines processed, processing each line, storing the results until I get to 600 lines, then opening a file, dumping the contents, and closing that file.

The awk program is doing the same processing, but for a single line at a time, and then outputting the result. It's optimized C code that just streams through the source file. There's no overhead of counting and storing chunks of 600 lines and opening/closing files to push the results to.
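Since both tools stream, the two steps could probably be chained with a pipe so the intermediate formatted file is never written. This is not what was run above, just a sketch under a few assumptions: GNU split (which reads standard input when the input name is -), a hypothetical format_and_split helper, [ \t] standing in for \s, and a made-up five-line sample file.

```shell
# Hypothetical helper: clean each line, then hand the stream straight to split.
# $1 = source file, $2 = output prefix, $3 = lines per chunk.
format_and_split() {
    awk '{sub(/[ \t]*\\[ \t]*$/, "")}1' "$1" |
        split -l "$3" --suffix-length 6 --numeric-suffixes=1 - "$2"
}

# Small demo with made-up data: 5 lines, 2 lines per job file.
work_dir=$(mktemp -d)
printf '%s\n' 'a \' 'b\ ' 'c \ ' 'd\' 'e \' > "$work_dir/big.file"
format_and_split "$work_dir/big.file" "$work_dir/j_" 2

# The third job file holds the single leftover line.
cat "$work_dir/j_000003"
```

Keeping the two steps separate, as in the commands above, has its own advantage: the formatted file can be inspected or re-split with a different chunk size without running awk again.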

Bash commands are fast in general, and while awk and split aren't bash builtins, they were much faster than PHP. I think it would have been much faster if I had written this script in Python, but two shell commands were just easier to write.